VP Genie

Usability Testing

DURATION

3 months

MY ROLE

UX Researcher

UX Designer

TEAM

1 X Supervisor

1 X PM

3 X UX Researchers & UX Designers

OVERVIEW

VP Genie is a comprehensive Gen AI-integrated solution under Interco.AI that lets users generate film-quality video from text. It is designed to support both the creative and production aspects of filmmaking.

We conducted this usability test on a beta version.

CURRENT WORKFLOW

Input: “create a play for little red riding hood.”

Output: script + characters + props + locations + storyboard scenes

VP Genie can generate a whole script and visual set in less than 30 seconds. By editing the details of each scene and visual, users end up with a well-organized, customized video.

STUDY SCOPE

Script Creation – Generate detailed outlines, scripts, characters, locations, scenes, props, and gear needed for production.

Storyboarding – Visually map out scenes effectively.

BUSINESS GOALS

🎯 Attract more users to try VP Genie’s paid subscription

🎯 Usability for All: Ensure VP Genie is effective for those still learning the craft of filmmaking.

UX GOAL

🔍 Identify UX/Product Gaps in pre-production workflows

RESEARCH QUESTIONS

Based on these goals, we came up with 3 main research questions:

PARTICIPANT CRITERIA

Another UX team already focuses mainly on professionals, so we targeted business filmmakers working with tight budgets. Our product could help them fill gaps in their teams, equipment, and more. Here are our criteria for participants:

We recruited 6 participants who met our requirements through personal networks, Slack, and the UW film department. Here are their details:

Participant 1: Cinematographer with 1 year of industry experience

Participant 2: Cinematographer, student

Participant 3: Master's student majoring in media and communication

Participant 4: Documentarian and editor with 2 years of industry experience

Participant 5: Animation director, student

Participant 6: Content creator/hobbyist with 1 year of experience

RESEARCH METHODS

In the preparation stage, we designed 5 steps for the end-to-end testing:

Standardized prompt given to participants to generate a script

Pre-test interview questions

Task-based, moderated, within-subjects usability testing

Observed participants performing given tasks

Assessed task success/failure rate, task error (1-5 scale)

Post-test interview questions and System Usability Scale (SUS)

Participants were asked to think aloud during the usability testing session. As researchers, we stayed quiet during the tasks: no leading questions and no question-and-answer exchanges. Instead, we paid close attention to participants’ on-screen actions, gestures, expressions, remarks, and so on.

STUDY SCENARIOS

To better explore the 3 research questions (challenges, understandings and emotions), we designed assessment standards and 3 test scenarios that cover most of our product’s main features, to help us track users’ thoughts and actions.

Scenario 1: Create a Script from a Prompt

Overview: The participant starts with an idea written as a prompt in a Word document and uses VP Genie to generate a script, scenes, characters, locations, and props.

Scenario 2: Edit or Regenerate Visual Elements

Overview: The participant has generated a script and now wants to edit specific characters and locations to align with their vision.

Scenario 3: Edit Storyboard

Overview: The participant refines a storyboard, making adjustments to scenes and shots.

TESTING TIMELINE

We spent 0.5 pd on the pre-test, 1 pd on usability testing and observation, 2 pd on the assessment, and 1 pd on the post-test interview and SUS.

TASK METRICS

With our participants’ permission, we recorded every testing session. After each participant finished, our team watched the playback and assessed task success and error rate together.

The metrics form showed that for tasks 3 and 4, most users had a hard time understanding how the feature worked and finishing the tasks.
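As a rough illustration of how per-task metrics like these can be tallied from session notes, here is a minimal Python sketch. It assumes each observation is a pair of (task completed, error rating on the 1-5 scale) and that a higher rating means more severe errors; both are assumptions, since the scale direction is not stated above. The function name `summarize_task` and the sample values are hypothetical placeholders, not our study data.

```python
from statistics import mean


def summarize_task(observations):
    """Summarize one task across participants.

    observations: list of (succeeded, error_rating) pairs, where
    succeeded is a bool and error_rating is an int from 1 to 5.
    Assumption: a higher error_rating means more severe errors.
    """
    if not observations:
        raise ValueError("at least one observation is required")
    for _, rating in observations:
        if rating not in (1, 2, 3, 4, 5):
            raise ValueError("error ratings must be integers from 1 to 5")

    successes = sum(1 for succeeded, _ in observations if succeeded)
    return {
        "success_rate": successes / len(observations),
        "mean_error": mean(rating for _, rating in observations),
    }


if __name__ == "__main__":
    # Hypothetical placeholder ratings for one task and six participants.
    example_task = [
        (True, 2), (False, 4), (False, 5),
        (True, 3), (False, 4), (False, 4),
    ]
    print(summarize_task(example_task))
```

Comparing the summaries task by task is one way to spot where participants struggled most, which is how tasks 3 and 4 stood out for us.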

WHAT WENT WELL

FINDINGS

Area of Improvement #1 Unexpected AI Content

Area of Improvement #2 No error recovery or version control

Area of Improvement #3 Unintuitive content editing workflow

Area of Improvement #4 Unfamiliar Terminology

Area of Improvement #5 Difficulties finding essential CTAs

Area of Improvement #6 Incomplete and unstructured script export

UX PROTOTYPES AND IMPROVEMENTS

Due to the NDA, I cannot show more details on the website. However, you are welcome to contact me for a portfolio deck, and I will show you how we transformed the findings into the design.

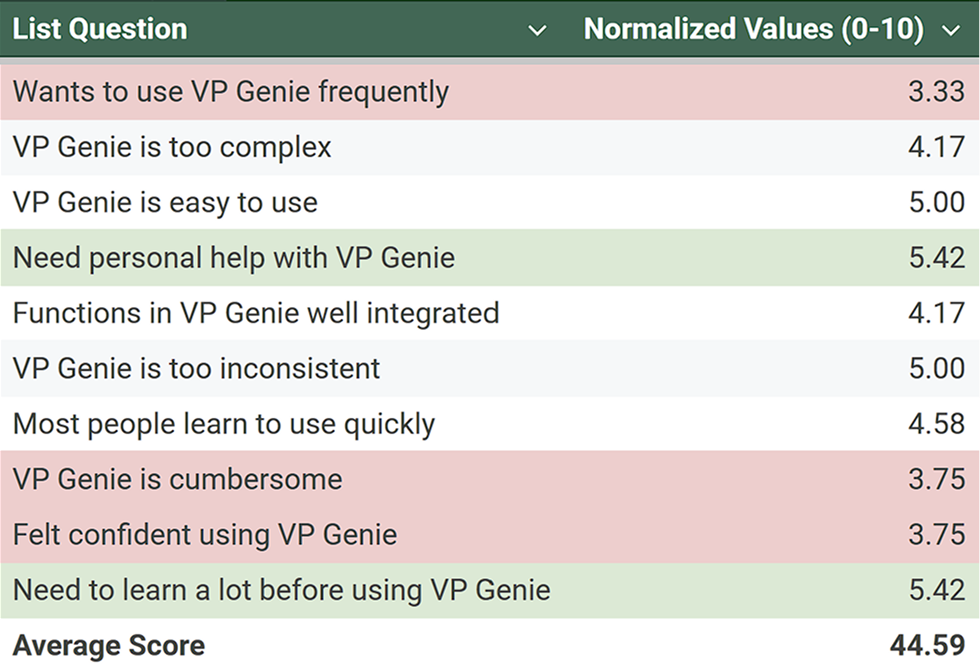

SUS

Our 6 participants reported they:

Did not see themselves using VP Genie often in its current state

Did not feel confident using VP Genie

Felt VP Genie was difficult to navigate

Yet did not think they would need a lot of help to learn to use VP Genie

SUS results chart: higher values mean participants felt more positive about VP Genie in that aspect.
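For readers unfamiliar with SUS, the standard scoring rule is one common way to make answers comparable so that a higher value always means a more positive response. The Python sketch below assumes the standard 10-item questionnaire answered on a 1-5 scale, where odd-numbered items are positively worded and even-numbered items are negatively worded. The function names and the sample answers are hypothetical and are not our participants' responses.

```python
def sus_item_contributions(responses):
    """Convert ten raw 1-5 SUS answers into 0-4 contributions.

    Odd-numbered items (1, 3, 5, ...) are positively worded, so the
    contribution is response - 1. Even-numbered items are negatively
    worded, so the contribution is 5 - response. After conversion,
    a higher value always means a more positive answer.
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")

    contributions = []
    for index, response in enumerate(responses):
        item_number = index + 1
        if item_number % 2 == 1:
            contributions.append(response - 1)
        else:
            contributions.append(5 - response)
    return contributions


def sus_score(responses):
    """Return the overall SUS score on a 0-100 scale."""
    return sum(sus_item_contributions(responses)) * 2.5


if __name__ == "__main__":
    # Hypothetical answers from a single participant, items 1 to 10.
    sample_answers = [2, 4, 2, 2, 3, 3, 3, 4, 2, 2]
    print(sus_item_contributions(sample_answers))
    print(sus_score(sample_answers))
```

Averaging the per-item contributions across participants gives one value per aspect, and averaging `sus_score` across participants gives a single overall number.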

TAKEAWAYS